AI systems don’t develop bias on their own—it comes straight from their training data. Flawed or biased training data will make AI models produce flawed and biased results. Even the smallest biases in datasets can affect generative AI outputs and create unfair outcomes for users.

Data bias in AI creates widespread problems with alarming results. AI writing tools that learn mostly from male-authored content tend to push gender stereotypes. The Gender Shades project revealed major accuracy gaps in commercial gender classification systems, with darker-skinned women facing the highest error rates. Outcomes in healthcare are even more dangerous: Black patients are three times as likely as white patients to have occult hypoxemia that pulse oximeters fail to detect.

The root of AI bias lies in what your training data leaves out. Historical bias shows up in society’s patterns. Lack of diversity creates representation problems. Large language models pick up biases from websites and books. These hidden patterns shape how AI behaves in unexpected ways. This piece gets into why AI bias happens, looks at different types of bias, and shows practical ways to spot and reduce these problems before they disrupt your AI systems.

Why Training Data is the Root of AI Bias

Training data forms the foundation that AI models use to understand the world. Whether these models behave fairly or show harmful biases depends on the quality and variety of their data and on how well it represents reality.

How generative models learn from data patterns

Generative AI models work by finding and encoding patterns in huge datasets. These models first analyze how existing data is connected and structured. They use this knowledge to create new content that follows these patterns. Unlike traditional algorithms that follow explicitly programmed rules, generative models rely on deep learning: neural networks, loosely inspired by the brain, that learn statistical patterns from examples.

These models can’t tell truth from fiction—they just predict what comes next based on patterns they’ve seen. They create content that sounds right rather than checking if it’s accurate. This basic limit explains why AI can sound human-like but still make factual mistakes or show bias.

These models also use random elements to create different outputs from similar inputs. This makes conversations feel more natural but adds uncertainty to how biases might show up.
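
To make the role of randomness concrete, here is a minimal sketch of temperature-based sampling, one common way a generative model picks its next token. The candidate words, their scores, and the function name `sample_next_token` are illustrative assumptions and do not come from any particular model.

```python
import math
import random

def sample_next_token(scores, temperature=1.0, rng=random):
    """Sample one token from softmax(scores / temperature)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    tokens = list(scores)
    scaled = [scores[t] / temperature for t in tokens]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical scores a model might assign to the next word after
# "The nurse said that ..." (made-up numbers for illustration only).
scores = {"she": 2.0, "he": 1.0, "they": 0.5}

rng = random.Random(0)  # fixed seed so the run is repeatable
counts = {token: 0 for token in scores}
for _ in range(10_000):
    counts[sample_next_token(scores, temperature=1.0, rng=rng)] += 1

print(counts)
```

With these made-up scores, the most probable word dominates but the others still appear. A skew learned from training data therefore surfaces in some share of outputs rather than all of them, and that share shifts with the temperature setting.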

Bias in artificial intelligence from flawed datasets

AI systems get their bias mainly from training data that has built-in errors or strays from true values. AI systems face several types of data bias:

- Sampling bias: This happens when training data comes from groups that don’t match the actual users. For example, an algorithm that predicted kidney problems was trained on data mostly from older non-Black men (over 90% male, average age 62). It didn’t work well for younger female patients.

- Measurement bias: This shows up when the way data is collected or measured is systematically less accurate for some groups than for others. Common medical tools like pulse oximeters give wrong oxygen readings for non-White patients, which leads to dangerous healthcare gaps.

- Representation bias: This occurs when datasets lack variety. Face recognition systems trained mostly on lighter-skinned people make mistakes far more often with darker-skinned people, especially women of color, getting it wrong for more than one in three women with darker skin.

- Historical bias: Past social inequalities live on in the data. AI hiring tools that learn from old employment data might keep old gender or racial gaps going in top jobs.

The problem gets worse because bias can come from many places at once. Bad outcomes happen when human, systemic, and computational biases combine without clear rules to handle these risks.

Examples of skewed outputs from biased training sets

Biased training data causes real-life problems in many fields:

AI-assisted diagnostic systems in healthcare are less accurate for African-American patients than for white patients when the training data doesn’t include enough diversity. AI prediction tools that use pulse oximeter readings might make health gaps worse by misreading oxygen levels in Black patients.

AI resume screeners can unfairly filter out good candidates based on gender. Job ads with words like “ninja” attract more men than women, even though such words have nothing to do with the actual job.

AI image generators also show troubling bias. Bloomberg looked at over 5,000 AI-made images and found that “the world according to Stable Diffusion is run by white male CEOs. Women are rarely doctors, lawyers or judges. Men with dark skin commit crimes, while women with dark skin flip burgers.” Research on Midjourney showed that its AI-generated images of specialized jobs included both young and old people, but every older person was a man.

These cases show why good training data matters so much. Small biases in datasets can grow huge when AI systems process them, potentially harming millions through unfair or harmful results.

Invisible Biases Introduced by Data Workers

Human data workers shape how AI systems behave through countless hidden decisions. These workers – annotators, transcribers, and labelers – embed their personal views into datasets. Their choices often go undocumented and unexplored.

Scenario: Cultural bias from underrepresented annotators

Technical teams’ demographics directly affect AI development results. Google’s 2020 report showed women held just 23.6% of global technical positions, up from 16.6% in 2014, yet nowhere near equal representation. Black and Latinx women make up less than 1.5% of Silicon Valley’s leadership roles. Researchers say this lack of diversity creates a “feedback loop” where algorithms mirror their creators’ experiences and backgrounds.

Scenario: Ambiguous labeling in classification tasks

AI errors often stem from unclear data annotation instructions. Datasets end up with inconsistencies when task guidelines lack precision or leave room for interpretation. This issue becomes particularly noticeable with subjective labels such as sentiment categories or facial expressions, whose meaning changes across cultures. Indeed, training methods commonly simplify the ground-truth label distribution by assuming each case has one correct “hard label”.
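
The difference between a single “hard label” and the full spread of annotator opinions can be sketched in a few lines. This is a minimal illustration under assumed inputs: the five votes, the three sentiment classes, and the helper names `hard_label` and `soft_label` are hypothetical.

```python
from collections import Counter

def hard_label(votes):
    """Collapse annotator votes into one label by majority vote."""
    return Counter(votes).most_common(1)[0][0]

def soft_label(votes, classes):
    """Keep the full vote distribution as a share per class."""
    counts = Counter(votes)
    return {label: counts[label] / len(votes) for label in classes}

# Hypothetical votes from five annotators on one ambiguous sentence.
CLASSES = ("positive", "neutral", "negative")
votes = ["positive", "positive", "neutral", "positive", "negative"]

single = hard_label(votes)                 # the disagreement is discarded
distribution = soft_label(votes, CLASSES)  # the disagreement is preserved
agreement = max(distribution.values())     # share of votes for the top label

print(single, distribution, agreement)
```

A low agreement share is one possible signal that the guideline for that item is ambiguous and worth revisiting before the label is used for training.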

Scenario: Reinforcement of stereotypes in transcription

Language processing systems absorb and amplify harmful stereotypes from their training data. Researchers tested this by creating matched sentence pairs: one sentence with a stereotypical view of a social group and another with an “anti-stereotypical” version. Three popular language models consistently rated stereotyped sentences as more typical than their counterparts. The models that scored highest on standard tests actually used stereotypes most extensively.

Scenario: Sentiment mislabeling due to personal views

Personal bias affects sentiment analysis systems significantly. Studies show at least 5% of questions asked to digital assistants contain sexually explicit content. The way human labelers react to this content, either neutrally or negatively, determines how AI systems handle harassment later. AI works as a “powerful socialization tool.” Its programmed responses to offensive comments can encourage or discourage similar real-world behavior. Content moderation is especially exposed to this problem, because biased annotation skews toxicity detection and unfairly targets certain ways of speaking.

Technical solutions get most of the attention, but they cannot fix bias alone without addressing the human aspect of data creation.

How Bias in Training Data Affects AI Outputs

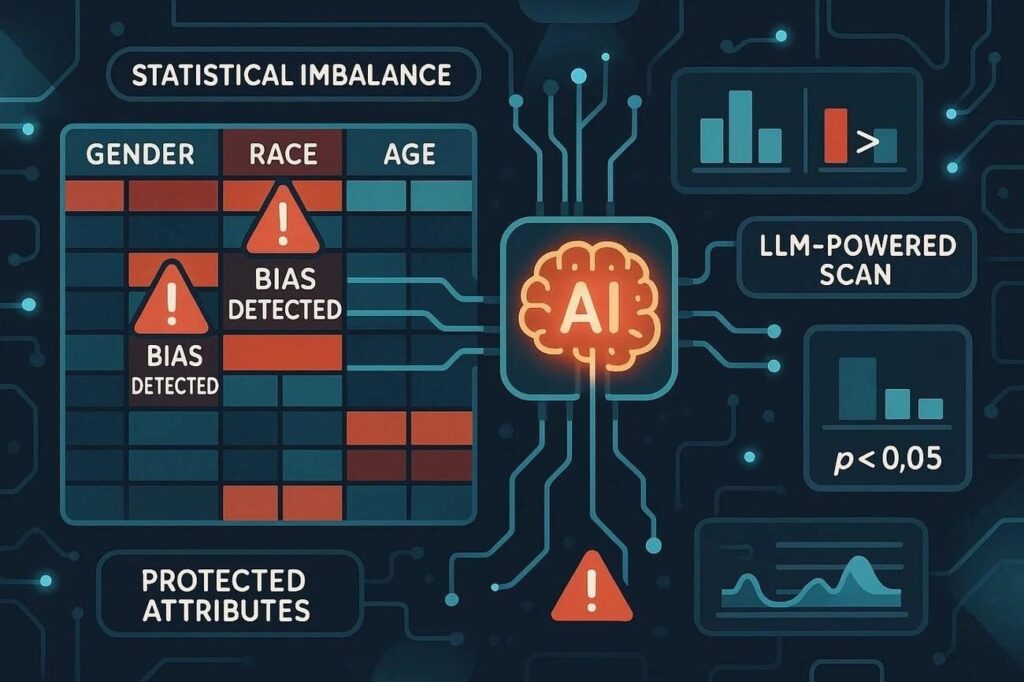

Image Source: AWS in Plain English

The path from biased training data to problematic AI outputs is predictable but troubling. AI systems absorb flawed or unrepresentative information and turn it into real-world harm, in ways that go beyond simply preserving existing bias.

Learning biased associations during model training

AI systems absorb biases through pattern recognition rather than conscious prejudice. Models analyze relationships within data statistically and form connections between concepts that show both legitimate correlations and society’s prejudices. Statistical bias happens when training data’s distribution fails to represent the true population distribution. Social bias goes beyond statistical variance and leads to poor outcomes for specific demographic groups.

Models learn to prioritize patterns from majority groups in their training data. For example, a model trained on a dataset with 80% healthy and 20% diseased medical images could achieve 80% accuracy by predicting everything as healthy, while missing every diseased case. These systems can’t distinguish correlations that reflect legitimate patterns from those that reflect harmful stereotypes; they just reproduce what they observe.
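
The 80/20 example can be worked through directly. The sketch below uses a synthetic set of 100 labels and a stand-in “model” that always predicts the majority class; the helper names are illustrative.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def recall(y_true, y_pred, positive):
    """Share of true `positive` cases that the model actually found."""
    preds_for_positive = [p for t, p in zip(y_true, y_pred) if t == positive]
    return sum(p == positive for p in preds_for_positive) / len(preds_for_positive)

# Synthetic data matching the text: 80 healthy images, 20 diseased.
y_true = ["healthy"] * 80 + ["diseased"] * 20
# A degenerate model that labels everything as the majority class.
y_pred = ["healthy"] * 100

overall = accuracy(y_true, y_pred)                     # 0.8
diseased_recall = recall(y_true, y_pred, "diseased")   # 0.0
healthy_recall = recall(y_true, y_pred, "healthy")     # 1.0
balanced = (diseased_recall + healthy_recall) / 2      # 0.5

print(overall, diseased_recall, balanced)
```

Overall accuracy looks respectable at 0.8, yet recall on the diseased class is 0.0 and balanced accuracy is 0.5, no better than guessing. Reporting per-class metrics is one way to expose this kind of majority-group shortcut.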

Amplification of stereotypes in generative outputs

Subtle biases in training data become magnified through AI systems. This amplification effect reshapes barely noticeable patterns into obvious problematic outputs. A 2023 analysis of over 5,000 images created with Stable Diffusion showed amplification of both gender and racial stereotypes.

Generative models don’t just copy existing biases; they make them worse. Studies of large language models (LLMs) showed that open-source models like Llama 2 wrote stories about men using words like “treasure,” “woods,” and “adventurous,” while stories about women featured words like “garden,” “love,” and “husband.” These models described women in domestic roles four times more often than men.

Emergence of new biases not present in original data

AI systems sometimes create entirely new biases absent from their original training data. This happens through “bias emergence”—creating novel prejudicial associations through pattern combinations. Generative AI models learn patterns from data and may create associations not present in the training data but aligned with learned biases.

Generative models can also produce inaccurate content by combining learned patterns in unexpected ways. The generative process adds randomness and variability, so bias can surface unpredictably even with similar inputs.

The harm from these mechanisms reaches many fields, from healthcare algorithms that underestimate disease severity in underrepresented populations to image generators that perpetuate harmful stereotypes. Predictive policing tools that rely on historical arrest data reinforce existing patterns of racial profiling. Understanding where bias originates and how it is transformed and intensified throughout the machine learning pipeline helps address these challenges.

Making Training Data Explainable and Transparent

AI developers need to expose their training data practices to address hidden bias. Transparency is the lifeblood of ethical AI development. Research shows a concerning trend: more than 70% of licenses for popular datasets are not properly specified. This lack of clarity creates legal and ethical risks for AI developers.

Tracking data sources and worker demographics

Reducing bias starts with detailed data provenance tracking. This involves documenting each dataset’s sources, licenses, and creators, and how people use it. Teams that trace their data lineage can select the right data with confidence. This approach has reduced the share of unspecified licenses from 72% to 30%.
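
One lightweight way to start is a structured record per dataset. The sketch below is a hypothetical schema, not a standard: the `DatasetRecord` fields, the example entries, and the `example.org` URLs are all invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class DatasetRecord:
    """Hypothetical provenance entry for one training dataset."""
    name: str
    source: str
    creator: str
    license: str = ""          # empty string means the license is unspecified
    intended_uses: tuple = ()  # for example ("research", "commercial")

def unspecified_license_share(records):
    """Fraction of records whose license field was left blank."""
    missing = [r for r in records if not r.license.strip()]
    return len(missing) / len(records)

# Invented catalogue entries, used only to exercise the check.
catalogue = [
    DatasetRecord("reviews-a", "https://example.org/a", "Lab A", "CC-BY-4.0", ("research",)),
    DatasetRecord("forum-b", "https://example.org/b", "Unknown"),
    DatasetRecord("news-c", "https://example.org/c", "Publisher C", "  "),
    DatasetRecord("chat-d", "https://example.org/d", "Vendor D"),
]

share = unspecified_license_share(catalogue)
needs_review = [r.name for r in catalogue if not r.license.strip()]

print(share, needs_review)
```

Keeping the license as an explicit field makes gaps countable, so a team can track the unspecified share over time instead of discovering it during a legal review.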

Worker demographics shape annotation quality in crucial ways. Research reveals AI users tend to be younger (73% under 50), better educated (51% have bachelor’s degrees), and urban dwellers (29%). These patterns can skew dataset creation toward certain worldviews without anyone realizing it.

Documenting labeling decisions and processes

Good documentation must cover:

- Labeling methodologies and criteria

- Annotation instructions provided to workers

- Processing decisions such as filtering or anonymization

- Tokenization methods that could introduce linguistic biases

These records help teams spot how human judgment might affect model outputs. Without clear documentation, teams can’t determine whether datasets contain harmful content or personal data that requires GDPR compliance.

Auditing datasets for representation gaps

Dataset audits reveal some hard truths about representation. Current training data shows a clear Western-centric bias. Even data in Global South languages comes mostly from North American or European creators. Regular auditing helps teams spot five main sources of bias: data gaps, demographic homogeneity, spurious correlations, flawed comparisons, and cognitive biases.

Tools like IBM’s AI Fairness 360 or Google’s What-If Tool help identify representation gaps. Even so, human review remains vital since automated tools can’t fully grasp the contextual nuances that affect fairness. Making training data transparent serves both ethical needs and business goals: teams can fix issues, boost performance, and build trust with stakeholders.
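
Before reaching for a full toolkit, a basic representation audit can be sketched with the standard library alone. The example compares each group’s share of a dataset with a reference share; the region labels, the counts, the reference shares, and the 10-point tolerance are all hypothetical choices made for illustration.

```python
from collections import Counter

def representation_gaps(group_labels, reference_shares):
    """Dataset share minus reference share for each group (negative = under)."""
    counts = Counter(group_labels)
    total = len(group_labels)
    return {g: counts[g] / total - ref for g, ref in reference_shares.items()}

def underrepresented(gaps, tolerance=0.10):
    """Groups whose share falls short of the reference by more than `tolerance`."""
    return sorted(g for g, gap in gaps.items() if gap < -tolerance)

# Hypothetical creator regions for 100 documents in a training set.
creators = ["north_america"] * 70 + ["europe"] * 25 + ["africa"] * 5
# Hypothetical shares of the population the system is meant to serve.
reference = {"north_america": 0.30, "europe": 0.30, "africa": 0.40}

gaps = representation_gaps(creators, reference)
flagged = underrepresented(gaps)

print(gaps, flagged)
```

The choice of reference population is itself a judgment call, which is one reason the human review described above still matters.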

Bias Mitigation Strategies for AI Developers

AI bias mitigation works best when teams understand they can address biases at any point in a model’s lifecycle – from initial data gathering to final deployment and beyond.

Data resampling and augmentation techniques

Smart resampling strategies help fix imbalanced datasets. Oversampling increases minority group representation by duplicating existing examples or creating synthetic ones. Undersampling reduces majority class samples to balance the data distribution. The Synthetic Minority Over-sampling Technique (SMOTE) is an oversampling method: it generates synthetic samples for underrepresented groups by interpolating between existing minority examples and their nearest neighbors. It does not remove majority examples itself, although it is often paired with undersampling. These techniques are powerful but come with limitations. SMOTE’s interpolation can create unrealistic data points that lead to misleading patterns.
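
The interpolation idea behind SMOTE can be shown with a simplified, standard-library sketch. This is not a reference implementation of SMOTE: the function name `smote_like`, the toy two-dimensional points, and the choice of `k=3` neighbors are assumptions made for illustration.

```python
import math
import random

def smote_like(minority, n_new, k=3, rng=random):
    """Create n_new synthetic points, each on the line segment between a
    minority sample and one of its k nearest minority neighbors."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = minority[:i] + minority[i + 1:]
        neighbors = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbor)))
    return synthetic

# Toy data: 8 majority points and 4 minority points (made-up values).
majority = [(float(x), float(y)) for x in range(4) for y in range(2)]
minority = [(1.0, 1.0), (1.2, 0.9), (0.9, 1.3), (1.1, 1.1)]

rng = random.Random(42)  # fixed seed so the run is repeatable
new_points = smote_like(minority, n_new=len(majority) - len(minority), rng=rng)
balanced_minority = minority + new_points

print(len(majority), len(balanced_minority))
```

Every synthetic point sits between two real minority points, which is also the source of the limitation noted above: if the minority class is not a single compact cluster, points on those segments may describe cases that never occur.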

Feature weighting to reduce bias effect

Feature importance adjustments help neutralize unwanted relationships in data. Reweighting assigns higher weights to minority class samples so that models pay more attention to underrepresented groups during training. Teams can also remove problematic variables, swap biased features for neutral ones, or add new variables to offset existing biases. These methods keep models from depending too much on attributes that correlate with protected characteristics.
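
One simple reweighting scheme is inverse-frequency weighting, sketched below. The formula `n_samples / (n_classes * class_count)` is the same heuristic scikit-learn uses for its “balanced” class weights; the function name and the 80/20 labels here are illustrative.

```python
from collections import Counter

def balanced_sample_weights(labels):
    """Weight each sample by n_samples / (n_classes * count of its class),
    so that every class contributes the same total weight."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return [n_samples / (n_classes * counts[y]) for y in labels]

# Synthetic labels: 80 majority-class samples and 20 minority-class samples.
labels = ["healthy"] * 80 + ["diseased"] * 20
weights = balanced_sample_weights(labels)

healthy_total = sum(w for w, y in zip(weights, labels) if y == "healthy")
diseased_total = sum(w for w, y in zip(weights, labels) if y == "diseased")

print(weights[0], weights[-1], healthy_total, diseased_total)
```

Each healthy sample gets weight 0.625 and each diseased sample 2.5, so both classes carry the same total weight of 50. Many training APIs accept such per-sample weights, though the parameter name varies by library.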

Continuous monitoring of model outputs post-deployment

Models need continuous monitoring as they process real-world data. Automatic retraining can be triggered when performance drops below set thresholds. Regular bias audits should check outcomes across different demographic groups to spot new disparities. Keeping models updated with fresh data is one of the more reliable defenses against biases that develop over time.
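
A recurring audit of this kind can be as simple as comparing positive-outcome rates across groups. The sketch below is a minimal illustration: the group names, the logged decisions, and the 0.10 gap threshold are hypothetical, and a real deployment would choose its metric and threshold to fit the use case.

```python
def selection_rates(records):
    """records: iterable of (group, outcome) pairs, with outcome 0 or 1."""
    totals, positives = {}, {}
    for group, outcome in records:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + outcome
    return {g: positives[g] / totals[g] for g in totals}

def disparity_alert(records, max_gap=0.10):
    """Flag when the gap between the highest and lowest rate exceeds max_gap."""
    rates = selection_rates(records)
    gap = max(rates.values()) - min(rates.values())
    return gap > max_gap, gap, rates

# Hypothetical decisions logged after deployment: 100 per group.
log = (
    [("group_a", 1)] * 50 + [("group_a", 0)] * 50
    + [("group_b", 1)] * 30 + [("group_b", 0)] * 70
)

alert, gap, rates = disparity_alert(log)
print(alert, gap, rates)
```

Here group_a receives a positive outcome half the time and group_b 30% of the time, so the 0.2 gap exceeds the threshold and raises the alert. Running the same check on each new batch of logged decisions turns the audit into a routine signal rather than a one-off review.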

Conclusion

Dealing with hidden bias requires a complete approach throughout the machine learning lifecycle. Bias starts with training data, and this fact changes the way we should develop and implement AI. Left unchecked, these biases don’t just persist; they grow stronger during model training and reinforce stereotypes that harm marginalized groups.

The effects of biased AI go well beyond technical metrics and reach real people in healthcare, employment, and many other areas. Small gaps in dataset representation can be amplified by machine learning systems and end up affecting millions through unfair outputs.

Data transparency remains the key step toward ethical AI. Documentation of data sources, worker demographics, labeling decisions, and processing methods is now crucial, not optional. This documentation helps you spot potential bias sources before they show up in your models.

There are clear ways to fix these issues. Data resampling techniques help balance group representation, while feature weighting reduces problematic variables’ impact. On top of that, constant monitoring after deployment catches new biases that might not show up in original testing.

Fair AI systems start when you recognize what your training data leaves out. Technical solutions are important but should work alongside human judgment and ethical thinking. Building unbiased AI needs diverse teams, clear processes, and constant alertness.

Humans make the decisions that create fair, unbiased AI—what data to collect, how to process it, and which safeguards to use. As AI becomes more embedded in critical systems, your steadfast dedication to addressing hidden bias will determine if these technologies serve everyone equally.